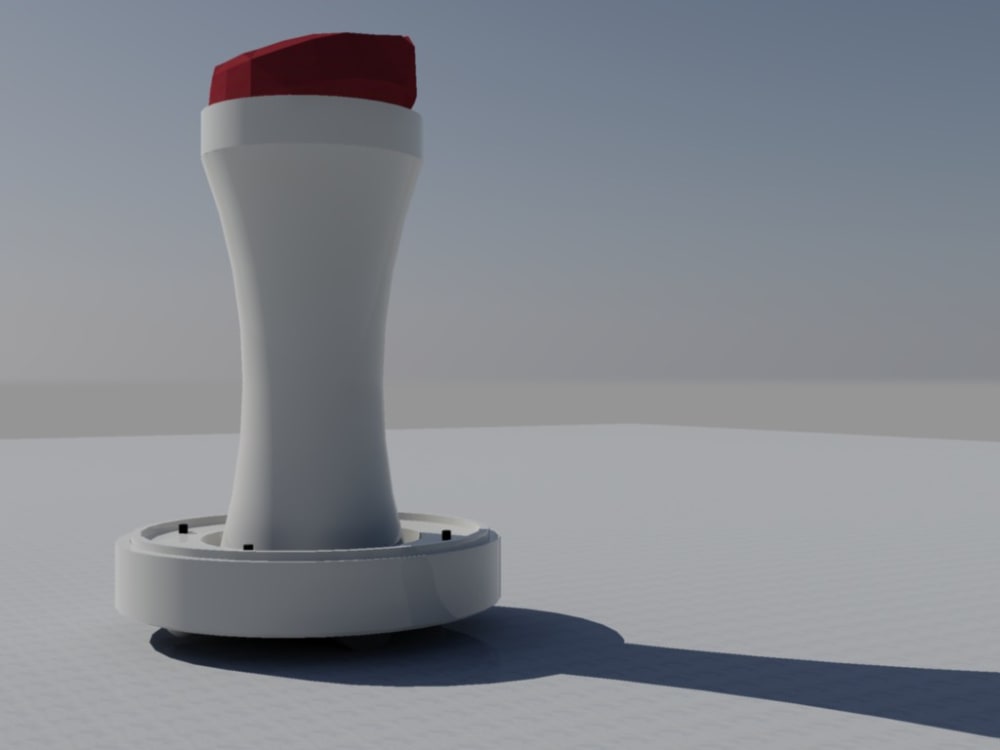

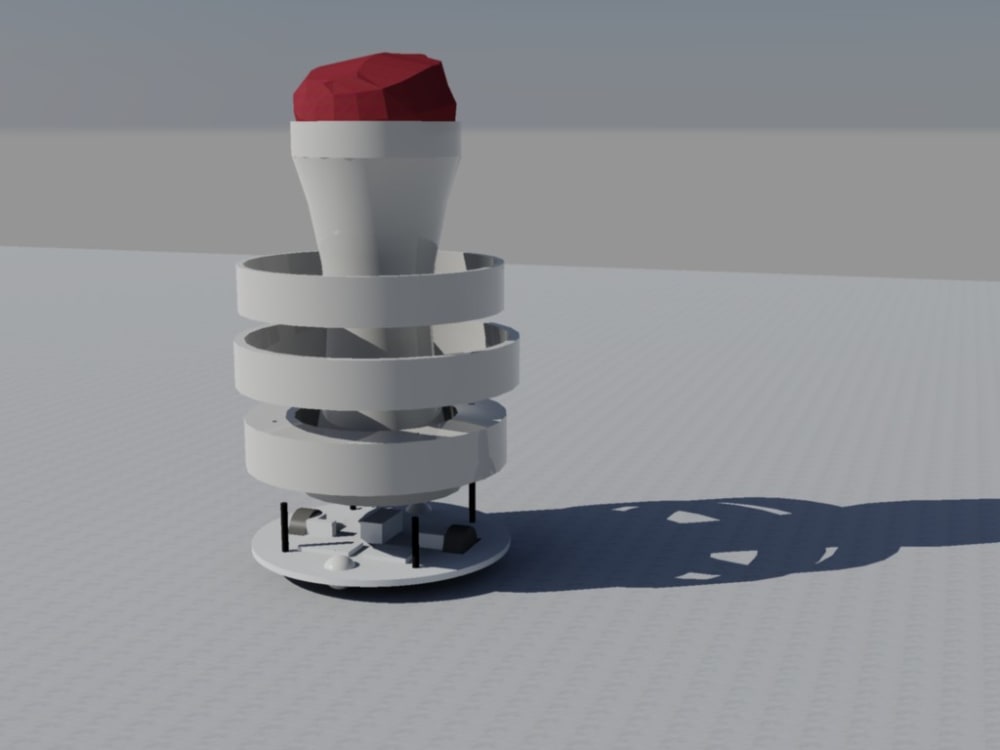

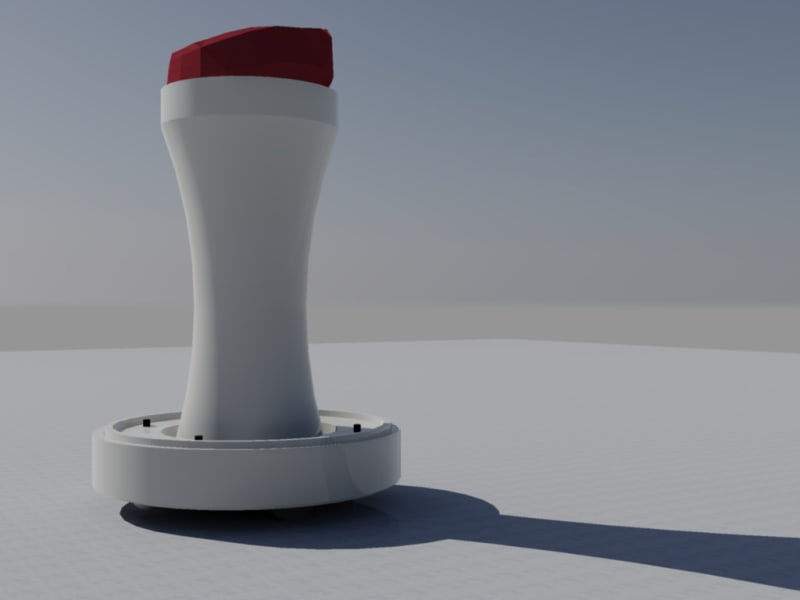

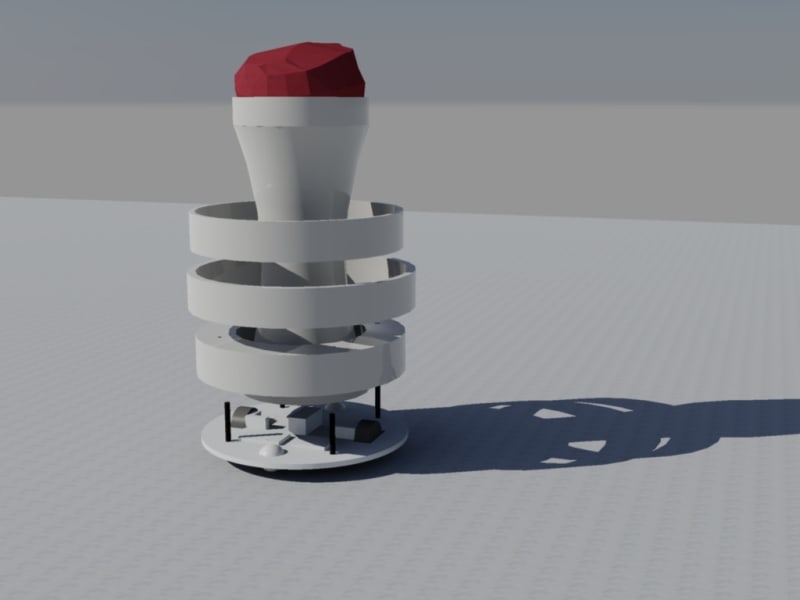

Sangi is a low-cost robot that can localize itself and map its environment without LIDAR, depth cameras or other vision sensors; it relies on inexpensive SONARs and odometry instead. Sangi will navigate around the house while carrying blind or disabled people to different locations.

Our end product will work in the following way:

1. A blind user connects a Bluetooth headset to the Sangi robot and gives the initialization command.

2. The robot initializes itself by colliding very softly with nearby obstacles at random. After a few of these soft collisions, it has a good estimate of its position in the indoor map and is ready to accept goals via voice commands.

3. The user sits on the robot and names a goal location, such as 'kitchen', 'toilet' or 'courtyard'.

4. The robot interprets the command and asks the user to confirm that the interpretation is correct.

5. The robot then autonomously carries the blind person to the goal location while avoiding all static and dynamic obstacles.
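
The soft-collision initialization in step 2 can be pictured as a Bayes filter in which each bump is a measurement. The sketch below is a minimal, hypothetical illustration, not Sangi's actual code: it assumes a small occupancy-grid map (`GRID`), noise-free one-cell moves, and a bump switch that is correct with probability `p_correct`, and it uses a discrete (histogram) Bayes filter. The entry does not say which filter Sangi uses, and all names here are invented for the example.

```python
from collections import defaultdict

# Hypothetical toy floor plan: '#' is a wall or obstacle, '.' is free floor.
GRID = [
    "#####",
    "#..##",
    "#...#",
    "#####",
]

# Assumed action set: one-cell moves as (row, column) offsets.
MOVES = {"N": (-1, 0), "S": (1, 0), "E": (0, 1), "W": (0, -1)}


def step(grid, cell, move):
    """Advance one cell, or softly bump and stay put if the target is blocked."""
    dr, dc = MOVES[move]
    target = (cell[0] + dr, cell[1] + dc)
    if grid[target[0]][target[1]] == "#":
        return cell, True
    return target, False


def uniform_belief(grid):
    """Before any collision, every free cell is equally likely."""
    cells = [(r, c) for r, row in enumerate(grid)
             for c, ch in enumerate(row) if ch == "."]
    return {cell: 1.0 / len(cells) for cell in cells}


def update(belief, grid, move, bumped, p_correct=0.9):
    """One predict + correct step of a discrete Bayes (histogram) filter."""
    new_belief = defaultdict(float)
    for cell, p in belief.items():
        predicted_cell, predicted_bump = step(grid, cell, move)
        # Weight each hypothesis by how well it explains the observed bump.
        likelihood = p_correct if predicted_bump == bumped else 1.0 - p_correct
        new_belief[predicted_cell] += p * likelihood
    total = sum(new_belief.values())
    return {cell: p / total for cell, p in new_belief.items()}


# Usage: the robot really starts at (2, 3) and tries three moves.
true_cell = (2, 3)
belief = uniform_belief(GRID)
for move in ["E", "N", "S"]:
    true_cell, bumped = step(GRID, true_cell, move)
    belief = update(belief, GRID, move, bumped)

best_guess = max(belief, key=belief.get)
```

Each soft collision down-weights every hypothesis that would not have bumped, which is why a handful of collisions can concentrate the belief on one cell; a real system would also model wheel slip in the motion step.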

The main deliverables we wanted to focus on in Project Sangi are:

> Very low cost, and affordable for the end consumer.

> Intelligent enough to navigate indoor environments completely autonomously, without any external guidance.

> A robust voice-assisted speech recognition system that gives the end user a friendly interface.

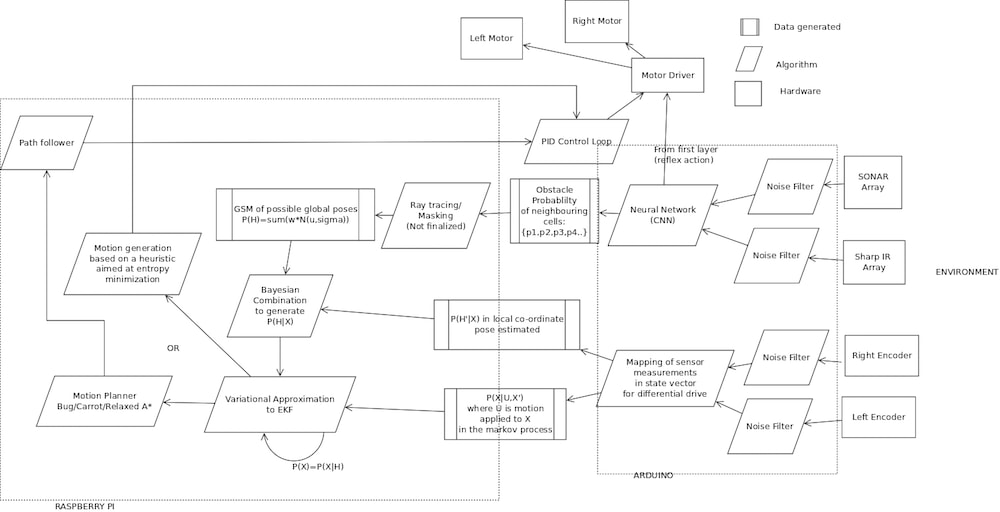

The technical approach we took to reach these goals was:

> SONAR and highly accurate odometry-based localization: to bring down the cost of our design, we could not use LIDARs or depth sensors like Kinect to gather information about the environment. We therefore rely on SONARs only, but SONARs are inaccurate, have a short range and are susceptible to a lot of noise. To overcome this, we developed a SONAR profiling algorithm based on machine learning techniques that increases their accuracy.
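
One plausible reading of "SONAR profiling" is a learned calibration from raw echo readings to true distance. The sketch below assumes calibration pairs collected against known distances and swaps in the simplest learning technique, an ordinary least-squares line, plus a median over a burst of pings to reject spikes. The entry does not name its actual machine-learning method; the function names and all numbers are hypothetical.

```python
from statistics import median


def fit_linear(raw, truth):
    """Ordinary least-squares fit of truth ~= a * raw + b."""
    n = len(raw)
    mean_x = sum(raw) / n
    mean_y = sum(truth) / n
    sxx = sum((x - mean_x) ** 2 for x in raw)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(raw, truth))
    a = sxy / sxx
    return a, mean_y - a * mean_x


def profiled_range(pings, a, b):
    """Median of a burst of pings rejects echo spikes, then apply the calibration."""
    return a * median(pings) + b


# Hypothetical calibration data (metres): this sensor reads ~10% long plus 2 cm.
truth = [0.5, 1.0, 1.5, 2.0, 2.5]
raw = [0.57, 1.12, 1.67, 2.22, 2.77]
a, b = fit_linear(raw, truth)

# A burst of five pings at 1.5 m, one of them a spurious long echo.
estimate = profiled_range([1.67, 1.66, 4.0, 1.68, 1.67], a, b)
```

A richer model (for example per-angle or per-surface features, or a nonlinear regressor) would follow the same fit-then-apply pattern.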

> Blind navigation algorithm: we developed a robust algorithm that reasons heuristically and can localize the robot even when sensor data is minimal. It combines an active localization technique with a heuristic-based motion selection technique to provide the best estimate of the robot's pose with minimal dependence on sensors. The resulting navigation is comparable to TurtleBot or other standard navigation platforms, without the need for a depth sensor like Kinect.
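
Active localization means choosing the next motion for the information it is expected to yield. As a hedged illustration only, the sketch below picks the move that minimizes the expected entropy of the position belief over the two possible bump outcomes, a standard active-localization criterion. Sangi's own motion-choosing heuristic is not described in the entry; the toy map, bump model and function names are assumptions.

```python
import math

# Hypothetical toy floor plan: '#' is a wall or obstacle, '.' is free floor.
GRID = [
    "#####",
    "#..##",
    "#...#",
    "#####",
]
MOVES = {"N": (-1, 0), "S": (1, 0), "E": (0, 1), "W": (0, -1)}


def step(grid, cell, move):
    """Advance one cell, or softly bump and stay put if the target is blocked."""
    dr, dc = MOVES[move]
    target = (cell[0] + dr, cell[1] + dc)
    if grid[target[0]][target[1]] == "#":
        return cell, True
    return target, False


def posterior(belief, grid, move, bumped, p_correct=0.9):
    """Return (normalized posterior, probability of observing `bumped`)."""
    weights = {}
    for cell, p in belief.items():
        new_cell, predicted_bump = step(grid, cell, move)
        likelihood = p_correct if predicted_bump == bumped else 1.0 - p_correct
        weights[new_cell] = weights.get(new_cell, 0.0) + p * likelihood
    evidence = sum(weights.values())
    return {c: w / evidence for c, w in weights.items()}, evidence


def entropy(belief):
    """Shannon entropy in bits; lower means a more certain pose estimate."""
    return -sum(p * math.log2(p) for p in belief.values() if p > 0)


def expected_entropy(belief, grid, move):
    """Average posterior entropy, weighted by how likely each bump outcome is."""
    total = 0.0
    for bumped in (True, False):
        post, evidence = posterior(belief, grid, move, bumped)
        total += evidence * entropy(post)
    return total


def choose_move(belief, grid):
    """Greedy active localization: take the most informative next move."""
    return min(MOVES, key=lambda m: expected_entropy(belief, grid, m))


# Usage: after one eastward bump the robot is probably in corner (1, 2) or
# (2, 3). Moving N, E or W gives the same bump outcome in both corners.
belief = {(1, 2): 10 / 21, (2, 2): 1 / 21, (2, 3): 10 / 21}
next_move = choose_move(belief, GRID)  # "S": bumps only in the lower corner
```

Repeating "choose, move, update" is what lets a bump-only robot localize with few collisions; a practical heuristic would also weigh travel cost and passenger safety.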

> Voice Interaction System: we used a UOL-based system and designed a speech recognizer that uses a suffix-string-based data structure to speed up search. Speech recognition is then performed using decision trees. Many third-party open-source libraries were used in this part, and the resulting speech recognition is fairly accurate and robust. Moreover, every voice command is confirmed with the user before execution to guarantee safety.
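
The entry gives few details here, so the following is only a sketch of the two ideas it names: a suffix-based index for fast lookup, and read-back confirmation. It builds a suffix array over a hypothetical list of location names, maps a recognizer transcript to a goal by substring search, and asks the user to confirm. The speech recognizer, the decision trees and the `ask` callback (standing in for text-to-speech plus recognition over the headset) are outside the sketch and assumed.

```python
from bisect import bisect_left

# Hypothetical goal vocabulary; Sangi's real location list is not published.
LOCATIONS = ["kitchen", "toilet", "courtyard", "bedroom"]


def build_suffix_array(words):
    """Sorted (suffix, word) pairs: any substring of a word prefixes one of its suffixes."""
    return sorted((w[i:], w) for w in words for i in range(len(w)))


def lookup(suffixes, fragment):
    """Binary-search the suffix array for every word containing `fragment`."""
    hits = set()
    i = bisect_left(suffixes, (fragment,))
    # Suffixes sharing the prefix are contiguous in sorted order.
    while i < len(suffixes) and suffixes[i][0].startswith(fragment):
        hits.add(suffixes[i][1])
        i += 1
    return hits


def interpret(transcript, suffixes, min_len=3):
    """Map a recognized transcript to one location, trying longer tokens first."""
    tokens = [t for t in transcript.lower().split() if len(t) >= min_len]
    for token in sorted(tokens, key=len, reverse=True):
        hits = lookup(suffixes, token)
        if len(hits) == 1:
            return hits.pop()
    return None  # nothing unambiguous: ask the user to repeat


def confirm(location, ask):
    """Read the interpretation back and proceed only on an explicit yes."""
    reply = ask(f"Did you say {location}?")
    return reply.strip().lower() in {"yes", "yeah", "correct"}


# Usage, with a stand-in for the headset's text-to-speech and recognizer.
suffixes = build_suffix_array(LOCATIONS)
goal = interpret("Take me to the kitchen please", suffixes)
approved = goal is not None and confirm(goal, ask=lambda prompt: "yes")
```

Dropping very short tokens keeps words like "to" from matching inside "toilet"; an ambiguous or empty match returns `None` so the robot asks again instead of guessing.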

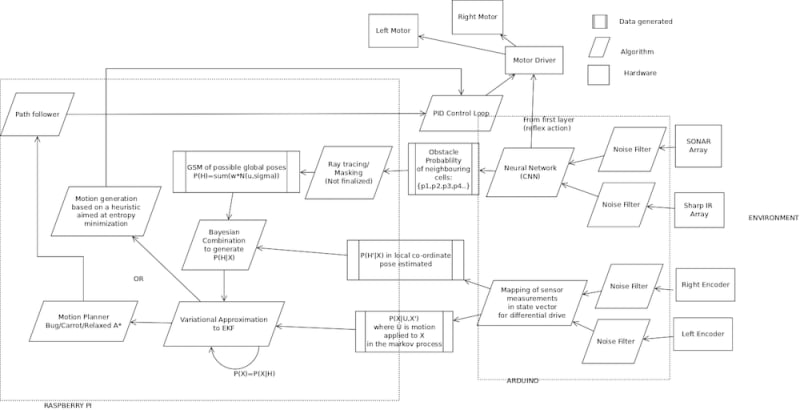

The overall software structure that we designed and are currently implementing is shown above.


About the Entrant

- Name: Shubhojyoti Ganguly

- Type of entry: team

- Team members: Shubhojyoti Ganguly, Sravani Rao, Srinivas Rao, Ishika Roy, Gopeshh Raaj

- Software used for this entry: Gazebo, AutoCAD, ROS

- Patent status: none