Life expectancy is projected to increase over the next 10 years, resulting in a growing population of adults over the age of 65. Improving quality of life and promoting healthy aging for this population is imperative, and care robots can contribute by fostering independence and providing emotional support. Emotion recognition is a promising capability for care robots serving not only older adults but also babies, who likewise need independence and emotional support. Beyond care robots, facial emotion recognition could improve quality of life or safety in healthcare and in any other field that requires an in-depth understanding of human emotion. The global emotion recognition market is projected to grow to over USD 40 billion by 2027.

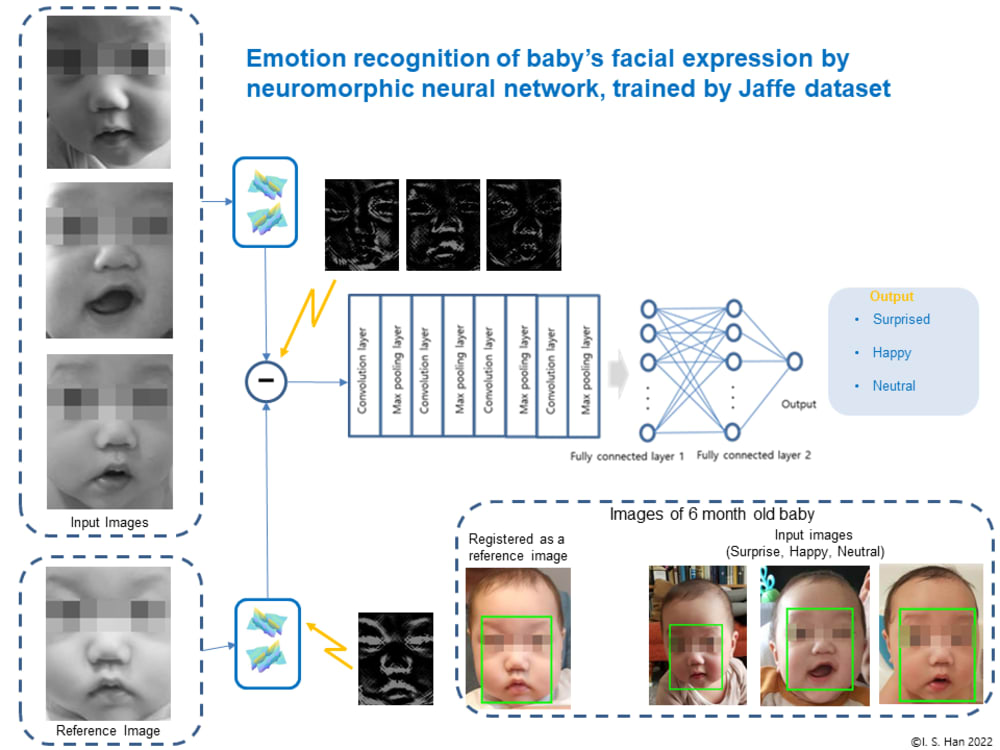

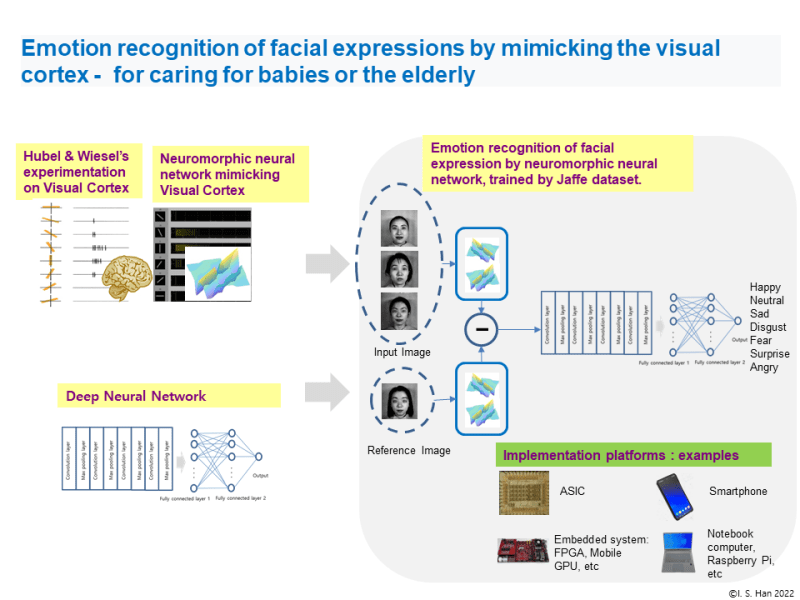

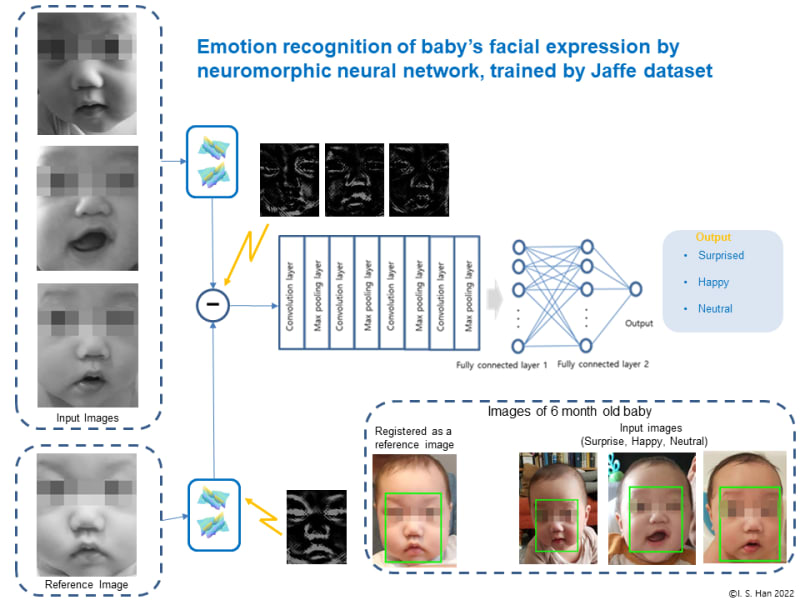

The new neuromorphic neural network design is inspired by the function of the primary visual cortex, specifically the orientation-tuned responses observed in Hubel and Wiesel's experiments, as shown in Illustration 1. Mimicking visual cortex neurons, the network extracts visual features of a facial expression and assesses the difference between the input image and the registered face (neutral emotion). The feasibility of this neuromorphic approach has already been tested for improving road safety.

The neuromorphic deep neural network is trained for emotion recognition on JAFFE (the Japanese Female Facial Expression dataset). Illustration 1 depicts the novelty of the approach: neuromorphic feature extraction from the facial expression, followed by deep-learning enhancement based on differential processing of the neuromorphic features. This enables robust recognition of a baby's facial expressions, as shown in Illustration 2, even though the network was never trained on baby faces. The baby's facial images were collected from a 6-month-old boy, an age at which emotional expression is presumed to begin developing.

The system works by first registering the user's face (a baby or an older adult); input images can then be supplied via a webcam or another image sensor. The region of interest, an area of 110 × 135 pixels, is determined after detecting the eyes. The early prototype was implemented on a notebook computer and achieves 95% accuracy at a speed of 5 emotion recognitions per second.
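The entry does not publish its neuron model or network, so the following is only a minimal sketch of the two ideas described above: orientation-tuned feature extraction and differential processing against a registered neutral face. It swaps in Gabor filters, a common textbook model of orientation-tuned V1 simple cells, for the author's neuromorphic neurons. The filter parameters, the four orientations, the reading of 110 × 135 as width × height, the ROI placement relative to the eyes, and the names `crop_roi` and `differential_features` are all hypothetical, and the deep network that would classify the difference maps is omitted.

```python
import numpy as np

# Hypothetical reading of the entry's ROI: 110 px wide, 135 px high.
ROI_WIDTH, ROI_HEIGHT = 110, 135
# Assumed: four preferred orientations (0, 45, 90, 135 degrees).
ORIENTATIONS = (0.0, np.pi / 4, np.pi / 2, 3 * np.pi / 4)


def gabor_kernel(theta, size=9, wavelength=4.0, sigma=2.0):
    """Real Gabor kernel, a stand-in model of an orientation-tuned V1 cell."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + y_rot ** 2) / (2 * sigma ** 2))
    carrier = np.cos(2 * np.pi * x_rot / wavelength)
    kernel = envelope * carrier
    return kernel - kernel.mean()  # zero mean: uniform patches give no response


def filter2d(image, kernel):
    """'Valid' 2-D correlation computed over sliding windows."""
    windows = np.lib.stride_tricks.sliding_window_view(image, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def crop_roi(image, eye_center):
    """Hypothetical ROI placement: eye midpoint (row, col) at 1/3 of ROI height."""
    row, col = eye_center
    top = int(row - ROI_HEIGHT // 3)
    left = int(col - ROI_WIDTH // 2)
    if (top < 0 or left < 0 or top + ROI_HEIGHT > image.shape[0]
            or left + ROI_WIDTH > image.shape[1]):
        raise ValueError("ROI falls outside the image")
    return image[top:top + ROI_HEIGHT, left:left + ROI_WIDTH]


def orientation_features(roi):
    """One rectified response map per orientation, stacked on axis 0."""
    roi = roi.astype(np.float64)
    return np.stack([np.abs(filter2d(roi, gabor_kernel(t))) for t in ORIENTATIONS])


def differential_features(input_roi, neutral_roi):
    """Input features minus registered neutral-face features (classifier omitted)."""
    return orientation_features(input_roi) - orientation_features(neutral_roi)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    neutral = rng.random((240, 320))  # synthetic stand-in for the registered face
    frame = neutral.copy()            # synthetic "input" identical to the registration
    diff = differential_features(crop_roi(frame, (120, 160)),
                                 crop_roi(neutral, (120, 160)))
    print(diff.shape)  # → (4, 127, 102)
    print(float(np.abs(diff).max()))  # → 0.0
```

Because the synthetic input equals the registered face, every difference map is zero; a changed expression would produce nonzero responses wherever the face differs from the neutral registration, and a trained classifier would map those responses to an emotion. At the reported 5 recognitions per second, the whole pipeline, including eye detection and classification, has a budget of about 200 ms per frame.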

Implementation feasibility has been verified on a Xilinx EVM, an Nvidia mobile GPU, and a Raspberry Pi, as shown in Illustration 1. Depending on scale and performance requirements, the product could be manufactured in various cost-effective ways: by designing an ASIC, by customizing an embedded system, or by building on a smartphone or a Raspberry Pi (or similar) to suit a particular performance target or business model. For integration with other products or services, the product could be built on a notebook computer (or similar).


About the Entrant

- Name: Il Song Han

- Type of entry: individual

- Software used for this entry: MATLAB, Python

- Patent status: none