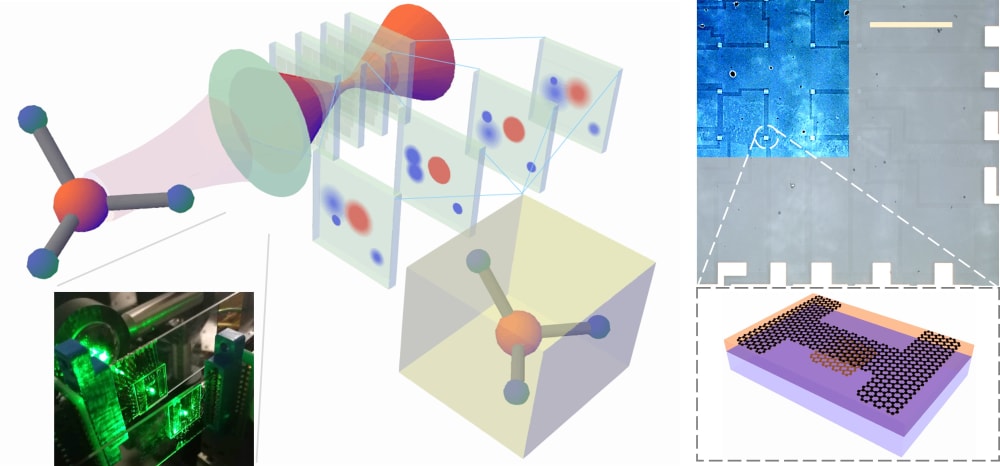

A transparent imaging stack integrated into a camera offers a new 3D sensing solution for Internet of Things (IoT) technologies. The camera simultaneously captures multiple images at different focal depths, and a neural network algorithm reconstructs the 3D configuration of objects from these images for real-time 3D motion tracking.

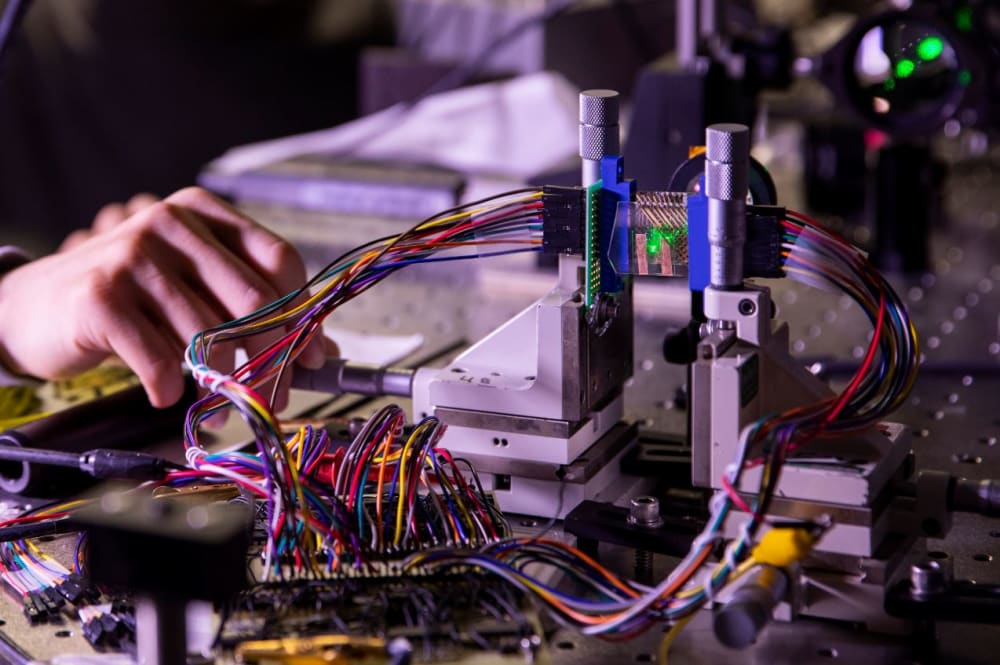

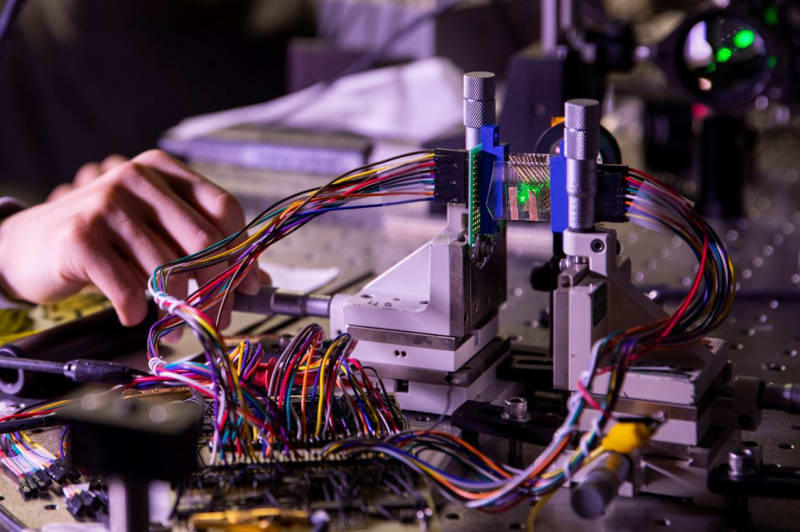

The imaging system exploits the advantages of transparent, highly sensitive graphene photodetectors. The graphene detectors absorb less than 10% of the light they are exposed to, making them nearly transparent. At the same time, the devices are sensitive enough to serve as detectors and scalable enough to build image sensors. A stack of transparent imaging sensors replaces the conventional single-plane CMOS sensor in the camera. When a picture is taken, light passes through multiple transparent imaging sensors at different focal depths, forming an image at each layer. This set of images, commonly called a focal stack, contains depth-dependent defocus blur and so encodes 3D information about the objects, which an artificial neural network can decipher.
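To make the idea of depth-dependent defocus blur concrete, the following is a minimal sketch based on the textbook thin-lens model, not the authors' optical model or reconstruction code. It computes the diameter of the blur spot that a point source at a given distance produces on each sensor plane. The function names and all numbers (50 mm focal length, 25 mm aperture, sensor planes at 52 mm and 54 mm) are hypothetical values chosen for illustration and do not describe the prototype.

```python
def image_distance(focal_length, object_distance):
    """Thin-lens equation 1/f = 1/s_o + 1/s_i, solved for the image distance s_i."""
    if object_distance <= focal_length:
        raise ValueError("object must lie beyond the focal length to form a real image")
    return focal_length * object_distance / (object_distance - focal_length)


def blur_diameter(focal_length, aperture, object_distance, sensor_distance):
    """Geometric blur-spot diameter of a point source on one sensor plane.

    Rays from the aperture converge at the image distance s_i, so by similar
    triangles the spot on a plane at sensor_distance has diameter
    aperture * |sensor_distance - s_i| / s_i.
    """
    s_i = image_distance(focal_length, object_distance)
    return aperture * abs(sensor_distance - s_i) / s_i


def focal_stack_blur(focal_length, aperture, object_distance, sensor_distances):
    """Blur-spot diameter on every plane of a (hypothetical) sensor stack."""
    return [
        blur_diameter(focal_length, aperture, object_distance, d)
        for d in sensor_distances
    ]


if __name__ == "__main__":
    # Hypothetical optics, all lengths in millimetres (illustration only).
    planes = [52.0, 54.0]
    for depth in (675.0, 1300.0):
        blur = focal_stack_blur(50.0, 25.0, depth, planes)
        print(f"object at {depth} mm -> blur diameters {blur}")
```

In this toy setup, an object at 1300 mm is sharp on the front plane and blurred on the rear one, while an object at 675 mm shows the opposite pattern. That difference in blur between planes is the kind of depth cue a neural network could learn to invert.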

The prototype includes a stack of two 4x4 (16-pixel) transparent photodetector arrays and demonstrates successful 3D tracking of one-point and few-point objects. Further scaling of the system looks promising: the yield was 99% across 192 fabricated devices, and the transparency of each layer is high enough to allow more imaging planes in the stack. Using a higher-resolution focal stack, the machine learning algorithm also precisely detects the 3D position and orientation of a real ladybug.
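A rough light-budget estimate shows why transparency matters for adding planes. The sketch below assumes that absorption is the only loss and that every layer absorbs the same fraction of the light reaching it; reflection and substrate losses are ignored. The function names are hypothetical, and the 10% figure is the upper bound quoted above rather than a measured per-layer value.

```python
def transmitted_fraction(absorption_per_layer, layers_in_front):
    """Fraction of incident light left after passing through the layers in front."""
    if not 0.0 <= absorption_per_layer < 1.0:
        raise ValueError("absorption_per_layer must be in [0, 1)")
    if layers_in_front < 0:
        raise ValueError("layers_in_front must be non-negative")
    return (1.0 - absorption_per_layer) ** layers_in_front


def light_budget(absorption_per_layer, num_layers):
    """Fraction of incident light arriving at each plane, front to back."""
    return [
        transmitted_fraction(absorption_per_layer, k) for k in range(num_layers)
    ]


if __name__ == "__main__":
    # Assumed worst case: each layer absorbs 10% of the light that reaches it.
    for n in (2, 5):
        print(n, "planes:", [round(x, 4) for x in light_budget(0.10, n)])
```

Under these assumptions, the fifth plane of a five-layer stack would still receive about 66% of the incident light (0.9 to the fourth power), and more if the real absorption is below 10%.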

The transparent imaging stack has unique advantages over existing 3D sensing technologies:

- The imaging stack is so compact that it can be integrated into commercial mobile phone cameras.

- The approach is also cheaper, safer, and more energy-efficient than LiDAR since it does not rely on active scene illumination by lasers.

- Parallel image capture at multiple depths, combined with the high inference speed of neural networks, makes a very high frame rate possible for real-time motion tracking. Similar performance is difficult to achieve with depth-sensing technologies that rely on scanning laser beams.

The future IoT will place great demands on 3D motion-tracking technologies. Autonomous vehicles, robots, and new manufacturing infrastructure all need close interaction with the 3D world around them. The compact system gives a wide range of IoT technologies an easy way to see in 3D. Beyond its large potential market, the new 3D sensing system could also benefit society: 3D vision-based robotics and biomedical technologies can help in future natural disasters, including earthquakes and pandemics.

This technology is made possible by a synergistic design of the nanophotonic hardware and the machine learning algorithm. Nanophotonic engineering of device properties produces transparent, sensitive image sensors, while neural networks convert the unique hardware readouts into useful 3D information. This combined approach to software and nanodevice design could lead to further applications that exploit such interdisciplinary innovation. The preliminary results were published in Nature Photonics 14(3), 143-148 (2020) and Nature Communications 12, 2413 (2021). The work was also covered by scientific and industrial news platforms, including Tech Briefs, Phys.org, Scienmag, and others across 12 news outlets.

Awards

2021 Top 100 Entries

About the Entrant

- Name: Dehui Zhang

- Type of entry: team

- Team members: Prof. Theodore B. Norris, Prof. Zhaohui Zhong, Prof. Jeffrey A. Fessler, Dr. Dehui Zhang, Mr. Zhengyu Huang, and Mr. Zhen Xu

- Patent status: none