Over 38,000 people die in automobile accidents each year in the U.S. alone, at a societal cost of over $700 billion. Machine vision controlling self-driving vehicles could dramatically reduce, and nearly eliminate, human-caused accidents.

Autonomous vehicles are an exciting and promising new frontier that will dramatically reshape the modern world. Major automotive and technology companies have invested billions of dollars to advance self-driving vehicles. Key to this robotic automation are new sensor technologies (video, radar, lidar, and others) intended to provide the digital model of the surrounding environment that automated navigation depends on. Epilog has introduced the world’s first 8K Smart Machine Vision for self-driving vehicles.

Real-world driving happens in all kinds of environments: different road conditions, different weather conditions, every level of traffic density, and vehicles traveling at all kinds of speeds. Add to this the various distractions inside and outside the vehicle, and it is a marvel that humans, with only their eyes and brains, can accomplish such complex navigation.

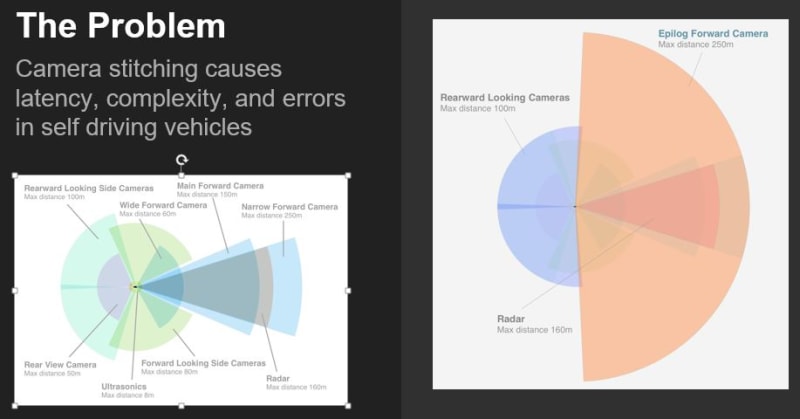

Current vehicle sensor deployments are primitive, too expensive, and not accurate enough to ensure consistent, efficient, and safe self-driving. Human drivers use only their eyes and brain to command vehicles quite successfully. Today’s autonomous vehicles try to compensate with multiple cameras, lidars, radars, and other sensors, yet they have not come close to true Level 4/Level 5 autonomy and will not scale to mass consumer deployment.

Having recognized these technology gaps, Epilog has developed a disruptive camera technology that captures seamless, high-resolution, scalable, ultra-fast video by tiling multiple image sensors behind a single lens. Epilog also integrates native neural network processing, scaled with resolution, to handle the resulting increase in data flow. This computational camera captures extremely high-resolution real-time video and processes the object stream within the camera itself, at the edge.
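To give a rough sense of the data flow involved, the sketch below estimates the raw (uncompressed) sensor output of a high-resolution stream. The frame sizes (7680 × 4320 for 8K UHD, 3840 × 2160 for 4K UHD), the 30 fps frame rate and the 12-bit pixel depth are illustrative assumptions, not Epilog specifications, and the helper `raw_data_rate_gbps` is hypothetical.

```python
# Rough raw-data-rate estimate for a high-resolution camera stream.
# All parameters below are illustrative assumptions, not Epilog figures.

def raw_data_rate_gbps(width_px: int, height_px: int, fps: float, bits_per_pixel: int) -> float:
    """Return the uncompressed sensor data rate in gigabits per second."""
    bits_per_second = width_px * height_px * fps * bits_per_pixel
    return bits_per_second / 1e9


if __name__ == "__main__":
    # Assumed 8K UHD frame (7680 x 4320), 30 fps, 12-bit raw pixels.
    rate_8k = raw_data_rate_gbps(7680, 4320, 30, 12)
    # Assumed 4K UHD frame (3840 x 2160) at the same settings, for comparison.
    rate_4k = raw_data_rate_gbps(3840, 2160, 30, 12)
    print(f"8K: {rate_8k:.2f} Gbit/s, 4K: {rate_4k:.2f} Gbit/s")
```

Under these assumed settings the 8K stream carries four times the raw data of a 4K stream (about 11.94 versus 2.99 Gbit/s), which illustrates why processing the object stream inside the camera, rather than moving raw video to a central computer, is attractive.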

Extraordinary advances in machine learning and neural networks have made more complex algorithms and higher-resolution image processing possible onboard self-driving vehicles. Camera technology has not kept pace: onboard video is typically based on HD/4K camera modules, alongside VGA-quality lidar and thermal sensors.

Epilog’s video imaging technology replaces pods of multiple cameras and lidars with a single inexpensive, high-speed video camera that captures a 180° panoramic view at super-high resolution (at least 8K). This approach provides a much more accurate view of the vehicle’s environment, making object recognition and identification safer. At the same time, manufacturing complexity and total cost of ownership (TCO) are greatly reduced. Current autonomous vehicle sensor stacks can cost $150,000 on top of the cost of the vehicle; Epilog replaces them with a computational camera costing hundreds of dollars.
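One way to see why resolution matters for a wide panoramic view is to estimate how many pixels land on a distant object. The sketch below is a simplification that assumes pixels are spread evenly across the 180° horizontal field of view (real wide-angle optics are not perfectly uniform); the function names and the 0.5 m object at 100 m are hypothetical values chosen for illustration.

```python
import math

# Simplified angular-resolution estimate for a single panoramic camera.
# Assumes an even pixels-per-degree mapping across the field of view.

def pixels_per_degree(horizontal_px: int, fov_deg: float) -> float:
    """Average horizontal pixels per degree of field of view."""
    return horizontal_px / fov_deg


def pixels_on_target(object_width_m: float, distance_m: float,
                     horizontal_px: int, fov_deg: float) -> float:
    """Approximate horizontal pixel count covering an object at a given distance."""
    # Angle subtended by the object, converted from radians to degrees.
    subtended_deg = math.degrees(2 * math.atan(object_width_m / (2 * distance_m)))
    return subtended_deg * pixels_per_degree(horizontal_px, fov_deg)


if __name__ == "__main__":
    # Hypothetical 0.5 m wide object at 100 m, 180-degree view.
    for label, width_px in (("8K", 7680), ("4K", 3840)):
        px = pixels_on_target(0.5, 100.0, width_px, 180.0)
        print(f"{label}: {pixels_per_degree(width_px, 180.0):.2f} px/deg, {px:.1f} px on target")
```

With these assumptions an 8K-wide frame gives about 42.7 pixels per degree and roughly 12 pixels across the object, versus about 21.3 pixels per degree and roughly 6 pixels for a 4K-wide frame stretched over the same 180° view.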

Epilog’s solution combines off-the-shelf, inexpensive sensors and powerful processors with optical software technology (9 patents) to deliver superior machine vision for mass markets. Epilog is in early testing and proof-of-concept (POC) trials with several vehicle OEMs and Tier 1 automotive suppliers.

www.epilog.com

Patent information available upon request

Awards

2019 Top 100 Entries

About the Entrant

- Name: Marc Munford

- Type of entry: team

- Team members: Epilog Imaging Systems Inc.

- Patent status: patented