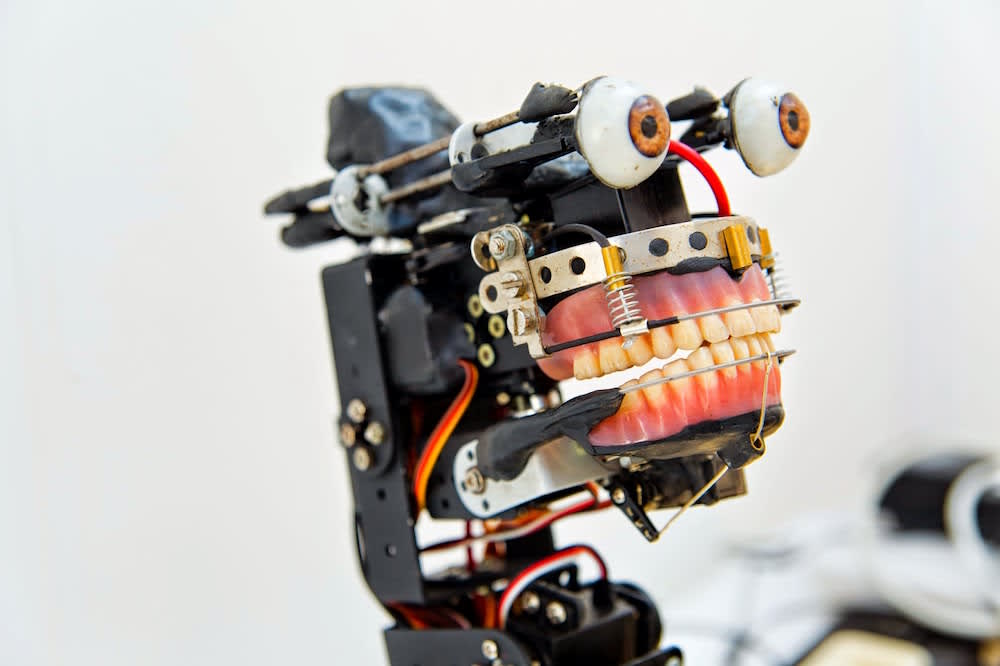

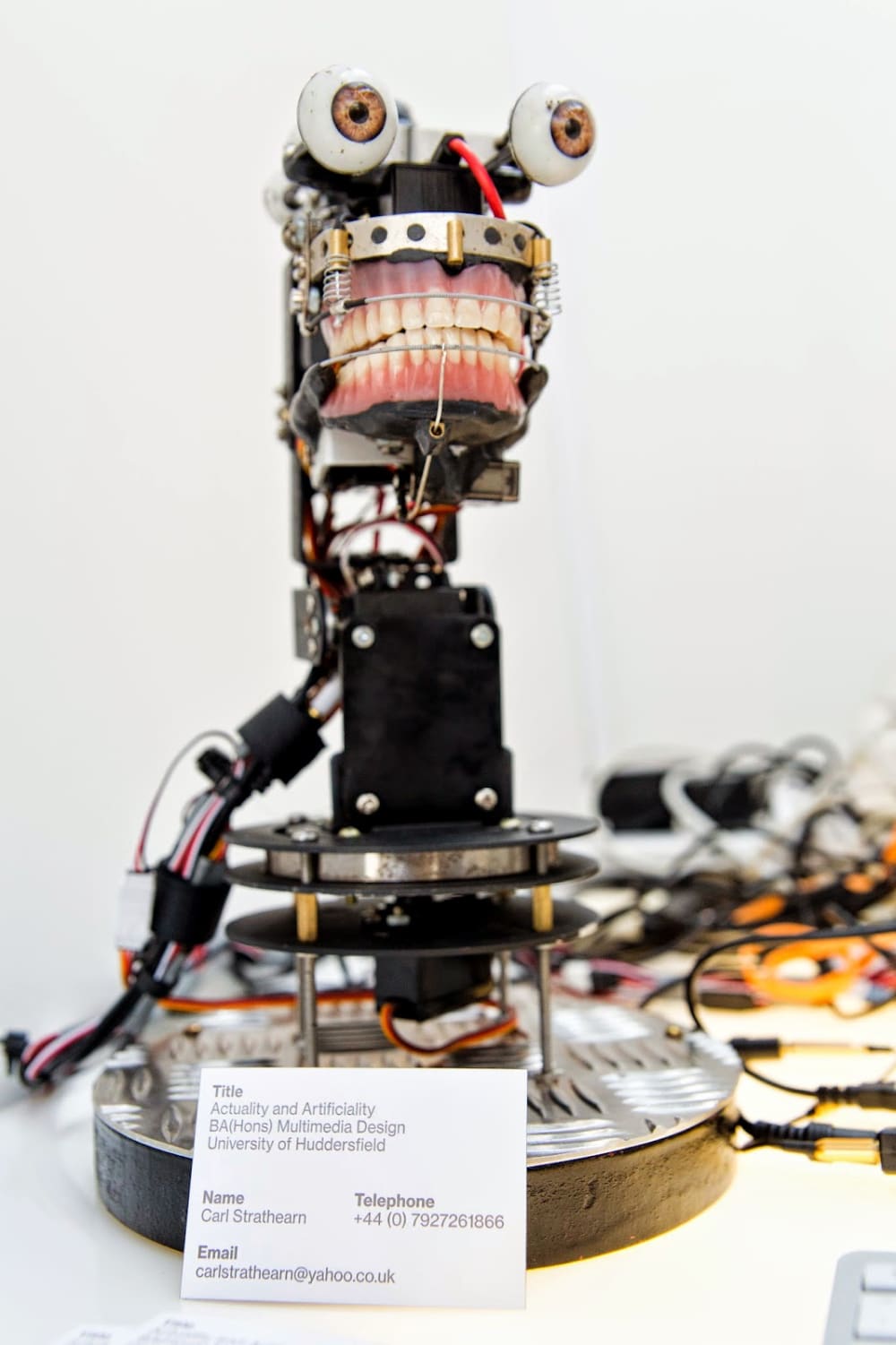

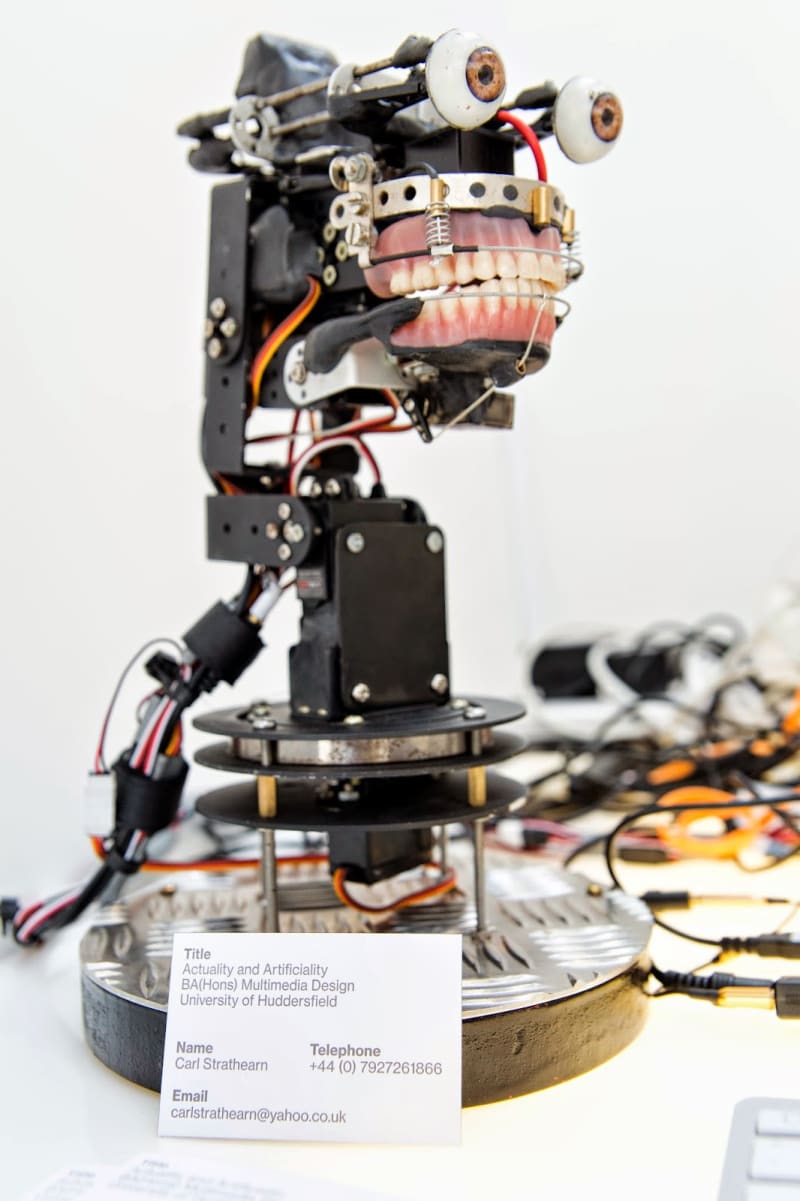

Egor v.2 can track movement and respond to questions typed on a wireless keyboard. Voice recognition can also be added by using the Mac's Dictation & Speech feature in System Preferences, with audio input from the Xbox Kinect's internal four-microphone array. The robot uses an adapted version of the Eliza framework (an early chatbot program and a forerunner of assistants such as Siri) to respond to participants' questions. The resulting 'script' is then passed to AppleScript's text-to-speech voice so that it can be heard through the robot's internal speaker system.
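As a rough illustration of how an Eliza-style responder turns a typed question into a reply, here is a minimal sketch in Python. It is not the entrant's script: the rules, the default reply and the names `RULES`, `REFLECTIONS`, `reflect` and `respond` are all hypothetical, and the gloomy tone only gestures at a Marvin-like persona.

```python
import re

# Hypothetical rules: (pattern, reply template). These are illustrative only
# and are not taken from the entrant's Eliza script.
RULES = [
    (re.compile(r"\bi am (.+)", re.IGNORECASE),
     "Why are you {0}? Not that it matters."),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE),
     "You feel {0}? I feel that way all the time."),
    (re.compile(r"\bwhat is (.+)", re.IGNORECASE),
     "I could explain {0}, but you would not enjoy it."),
]
DEFAULT_REPLY = "I don't expect you to understand."

# Swap first and second person so the echoed fragment reads naturally.
REFLECTIONS = {"i": "you", "my": "your", "me": "you",
               "you": "I", "your": "my", "am": "are"}


def reflect(fragment):
    """Lower-case the fragment and swap pronouns word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(text):
    """Return the reply for the first matching rule, else a default reply."""
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            captured = match.group(1).rstrip(".?! ")
            return template.format(reflect(captured))
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(respond("I am tired of my job"))
```

The reply string returned by `respond` is the kind of text that would then be handed to a speech synthesizer; changing the persona amounts to editing the rule table.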

The project is intended as an interactive exhibit, but it is highly adaptable and would suit museum displays, help desks or interactive theatre. The Eliza framework can be changed to mimic any individual or language (it is currently based on Marvin from The Hitchhiker's Guide to the Galaxy) and to answer complex questions, making this a highly interactive and knowledgeable system. The voice can also be modelled on specific individuals, and the mouth and lips react to the sound level at the computer's audio output, so they stay more or less in time with the speech. The system tracks people's movement via a Kinect module. The current system uses an open software library that tracks the pixel nearest to the sensor; however, the script also includes skeleton tracking, which can be activated by un-commenting the skeleton tracking code and commenting out the point tracking library. Skeleton tracking means that multiple individuals can be tracked and interacted with at once, allowing for larger audiences.
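The nearest-pixel tracking described above can be sketched in a few lines of Python. This is a simplified illustration, not the library the project uses: the depth frame is assumed to be a plain grid of distance readings in which 0 means "no reading", and the function names and the 0 to 180 degree servo range are hypothetical.

```python
def nearest_point(depth_frame):
    """Return (x, y, depth) of the closest valid pixel, or None if none is valid.

    Assumption: depth_frame is a list of rows of numeric depth readings,
    and a value of 0 (or less) marks a pixel with no valid reading.
    """
    best = None
    for y, row in enumerate(depth_frame):
        for x, depth in enumerate(row):
            if depth <= 0:
                continue  # skip pixels with no reading
            if best is None or depth < best[2]:
                best = (x, y, depth)
    return best


def to_servo_angle(x, width, min_angle=0.0, max_angle=180.0):
    """Linearly map a pixel column (0 .. width - 1) to a servo angle."""
    if width < 2:
        return (min_angle + max_angle) / 2.0
    return min_angle + (max_angle - min_angle) * x / (width - 1)


if __name__ == "__main__":
    # Tiny 3x3 stand-in for a real depth image (values in millimetres).
    frame = [
        [0, 900, 1200],
        [800, 0, 650],
        [700, 1000, 0],
    ]
    point = nearest_point(frame)
    if point is not None:
        x, y, depth = point
        print(x, y, depth, to_servo_angle(x, len(frame[0])))
```

Tracking a single nearest point keeps the control loop simple, since only one target ever drives the head; the trade-off is that it follows whatever is closest to the sensor, which is why skeleton tracking is the better fit when several people are present.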

About the Entrant

- Name: Carl Strathearn

- Type of entry: individual

- Software used for this entry: Arduino, Processing, AppleScript

- Patent status: none